Introduction – Welcome to the Black Box Era

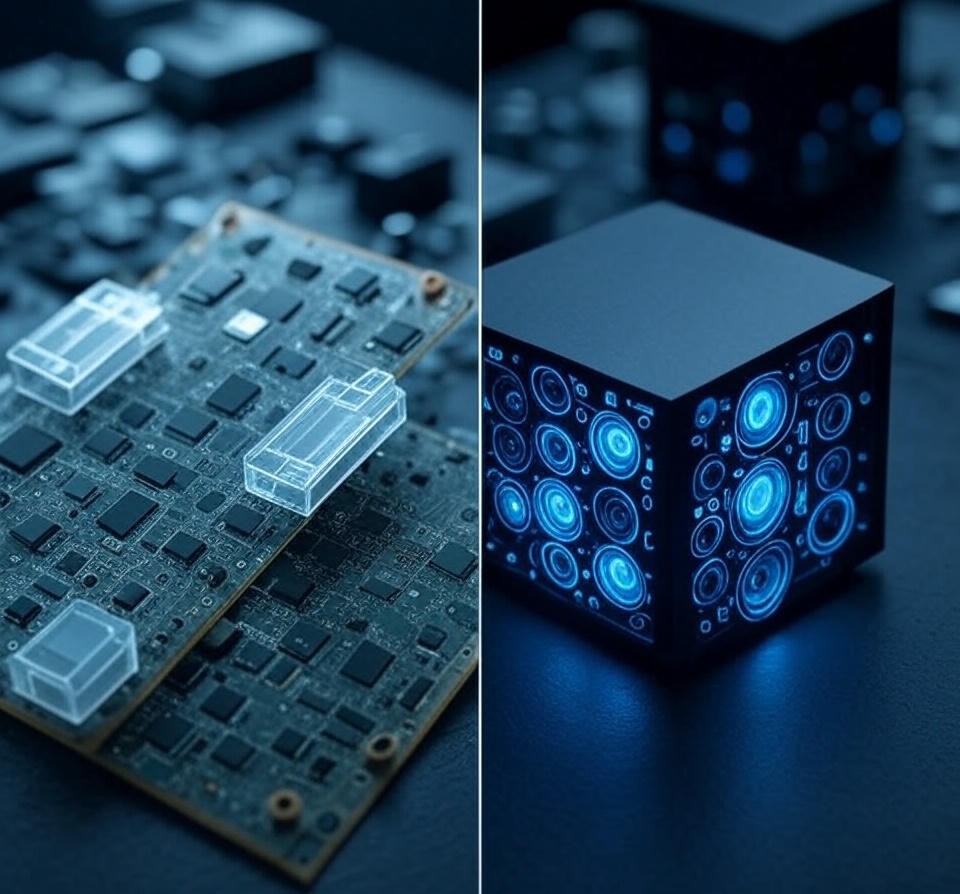

In the not-so-distant past, developers could open up a file, scan the code, understand its logic, and debug it line-by-line. But today, we’re rapidly entering a “Black Box Era”—a time when much of the software we depend on is wrapped in complexity or guarded behind proprietary walls.

From closed-source AI models to obfuscated cloud microservices, the transparency of code is fading. As engineers, we now interface with layers we can’t fully see, let alone understand. This shift has profound implications—not just for how we code, but for how we trust, audit, and innovate in a world dominated by systems we no longer fully control.

This article explores what the Black Box Era means for developers, product teams, and even end-users—and how we can adapt in a time when “running code” no longer guarantees knowing what it does.

What Defines a “Black Box” in Modern Tech?

In engineering and computer science, a black box refers to any system whose internal workings are not visible or accessible. You feed in an input, you get an output—but what happens in between remains hidden or unknowable.
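In testing terms, treating a component as a black box means judging it only by its inputs and outputs. The sketch below illustrates that idea in Python. It assumes a hypothetical opaque function `vendor_sort` whose source we pretend we cannot read. Instead of inspecting its code, the harness probes the function with random inputs and checks properties that any correct sort must satisfy.

```python
import random
from collections import Counter
from typing import Callable, List

def vendor_sort(items: List[int]) -> List[int]:
    # Hypothetical stand-in for an opaque component; pretend we cannot read this.
    return sorted(items)

def black_box_check(fn: Callable[[List[int]], List[int]], trials: int = 200) -> bool:
    """Probe fn with random inputs and verify properties of its outputs only."""
    rng = random.Random(42)  # fixed seed so any failure is reproducible
    for _ in range(trials):
        data = [rng.randint(-100, 100) for _ in range(rng.randint(0, 20))]
        out = fn(list(data))  # pass a copy so fn cannot mutate our reference input
        # Property 1: the output is in non-decreasing order.
        if any(a > b for a, b in zip(out, out[1:])):
            return False
        # Property 2: the output is a permutation of the input (same elements, same counts).
        if Counter(out) != Counter(data):
            return False
    return True

print(black_box_check(vendor_sort))  # True
```

The same harness flags a broken implementation: `black_box_check(lambda xs: xs)` returns `False`. Property checks like these can't prove a black box correct, but they can catch it misbehaving.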

In 2025, this black-box concept is no longer theoretical—it defines much of the software world.

Here are the most common examples of modern black boxes:

- Large Language Models (LLMs): Tools like GPT or Gemini are so complex that even their creators can’t fully explain why they generate certain outputs.

- Cloud Microservices: When using an API from AWS, OpenAI, or Google Cloud, you rarely see the code or infrastructure behind it.

- AI-Powered SaaS Platforms: Platforms claim “AI does the magic,” but the algorithms, data sets, and tuning often remain undisclosed.

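- Closed-Source Dependencies: Modern apps rely on dozens of third-party libraries and SDKs that you don’t own and frequently can’t inspect, because closed-source components ship only as compiled binaries or hosted services. You also often don’t update them yourself.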

The black box isn’t always malicious—it’s often a byproduct of optimization, speed, and scale. But the trade-off is control and clarity.
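One small way to win back some clarity is to know exactly what you depend on. The following is a minimal sketch that assumes a Python project. The standard library’s `importlib.metadata` can list every installed distribution and count how many requirements each one declares. The `dependency_inventory` helper is a hypothetical name. The sketch shows only what is installed; it tells you nothing about what a closed-source component does internally.

```python
from importlib.metadata import distributions
from typing import List, Tuple

def dependency_inventory() -> List[Tuple[str, str, int]]:
    """Return (name, version, declared_requirement_count) for each installed distribution."""
    rows = []
    for dist in distributions():
        meta = dist.metadata
        name = meta.get("Name") if meta is not None else None
        if not name:  # skip entries with broken or missing metadata
            continue
        requires = dist.requires or []  # None when a package declares no dependencies
        rows.append((name, dist.version, len(requires)))
    return sorted(rows, key=lambda row: row[0].lower())

inventory = dependency_inventory()
print(f"{len(inventory)} installed distributions")
for name, version, n_reqs in inventory[:10]:
    print(f"{name}=={version} (declares {n_reqs} requirements)")
```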

⚙️ Why Black Box Systems Are Booming

Black box systems aren’t just common—they’re exploding in popularity. And it’s not by accident.

Here are the driving forces behind their rise:

1. 🚀 Speed Over Transparency

Startups and corporations alike prioritize rapid deployment. It’s faster to plug into a prebuilt AI model or SaaS API than to build from scratch. Transparency takes a back seat to market speed.

2. 🌐 APIs & AI-as-a-Service

APIs for everything—from text generation to fraud detection—have enabled a plug-and-play development culture. Developers no longer need to “know” the internals. They just connect, pay, and use.

LLMs, computer-vision (CV) models, and prediction engines are shipped as complete packages, with zero visibility required.

3. 🔒 Proprietary Code & IP Protection

Big tech firms lock down their IP. The logic inside their services is guarded to protect revenue and competitive advantage. This creates a culture where opacity is the default.

4. 📦 Abstraction Layers Gone Wild

Frameworks now sit on top of frameworks, libraries on top of libraries. The modern stack is a skyscraper of black boxes—each hiding complexity to offer convenience.

5. 🧠 AI Models Are Inherently Opaque

Unlike hand-written algorithms, AI/ML models learn their behavior from training data and encode it in millions or billions of numeric weights. You can’t inspect a neural net and “understand” its reasoning the way you might debug a loop in Python.

The result? Useful systems that can’t always be explained—even by their creators.

The boom of black box systems is rooted in efficiency, speed, and protection—often at the cost of understanding, auditability, and trust.

🔍 The Hidden Risks of Blind Trust

As we rush to adopt AI models, closed-source APIs, and layers of abstraction, we inherit more than just convenience — we also inherit risks we can’t always see or predict.

Let’s uncover the hidden costs of relying on black box systems:

1. 🧯 Debugging Becomes a Nightmare

When something breaks inside a black box, you can’t peek in to fix it. You’re left guessing. This slows down incident response and can paralyze entire systems if the provider doesn’t respond fast enough.

Imagine debugging a critical API call that silently fails — and the vendor’s docs haven’t been updated in 8 months.
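You can’t fix a black box from the outside, but you can make its failures loud and traceable. The following is a minimal Python sketch. It assumes the vendor call is wrapped in a zero-argument function that returns a dict. The names `guarded_call` and `VendorResponseError` are hypothetical and not part of any SDK. The wrapper logs each attempt with its latency. It treats a response with missing fields as a failure rather than a success, and it retries with exponential backoff.

```python
import logging
import time
from typing import Any, Callable, Dict, Set

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("vendor_api")

class VendorResponseError(RuntimeError):
    """Raised when an opaque API fails or returns output we cannot trust."""

def guarded_call(call: Callable[[], Dict[str, Any]], required_keys: Set[str],
                 retries: int = 3, backoff: float = 0.5) -> Dict[str, Any]:
    """Call a black-box API, log every attempt, and fail loudly on malformed output."""
    last_error = None
    for attempt in range(1, retries + 1):
        start = time.monotonic()
        try:
            resp = call()
            missing = required_keys - set(resp)
            if missing:
                # A "successful" response with missing fields is the silent-failure case.
                raise VendorResponseError(f"missing keys: {sorted(missing)}")
            log.info("attempt %d ok in %.3fs", attempt, time.monotonic() - start)
            return resp
        except Exception as exc:  # network errors and malformed responses alike
            last_error = exc
            log.warning("attempt %d failed after %.3fs: %s",
                        attempt, time.monotonic() - start, exc)
            if attempt < retries:
                time.sleep(backoff * 2 ** (attempt - 1))  # exponential backoff
    raise VendorResponseError(f"all {retries} attempts failed") from last_error

# Hypothetical vendor call that "succeeds" but silently drops the "result" field.
def flaky_vendor() -> Dict[str, Any]:
    return {"status": "ok"}

try:
    guarded_call(flaky_vendor, required_keys={"status", "result"}, backoff=0.0)
except VendorResponseError as err:
    print("caught:", err)  # caught: all 3 attempts failed
```

Validating the shape of every response turns a silent failure into a logged, actionable one. The retry count and backoff values here are arbitrary placeholders.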

2. 🕵️ Security Through Obscurity ≠ Security

You can’t secure what you can’t audit. Blindly trusting a closed model to handle sensitive data can expose users to:

- Data leaks

- Privacy violations

- Regulatory non-compliance (GDPR, HIPAA, etc.)

True security requires the ability to audit, and a black box gives you little or none.
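One mitigation that stays under your control is limiting what leaves your system in the first place. The following is a deliberately simple Python sketch, assuming free-text input bound for a third-party model. The regex patterns are illustrative only and will miss many real-world identifiers. Treat this as a first line of defense, not as a compliance measure.

```python
import re

# Illustrative patterns only; real PII detection needs far more than regexes.
# SSN runs before PHONE because the phone pattern would also match 123-45-6789.
PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}

def redact(text: str) -> str:
    """Replace obvious identifiers with placeholders before text is sent to an external API."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

print(redact("Contact jane.doe@example.com or +1 555-123-4567, SSN 123-45-6789."))
# Contact [EMAIL] or [PHONE], SSN [SSN].
```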

3. 🧪 Unexplainable Behavior

AI models, especially large ones, can behave in ways that are:

- Unintended

- Biased

- Unethical

- Completely nonsensical

But if you can’t look inside, how can you improve or control that behavior?

Example: An AI resume screener that systematically downgrades female candidates, with no one able to explain why.
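Even when you can’t look inside a model, you can still audit what comes out of it. The following is a minimal Python sketch. It assumes you log each decision alongside a group label; the data below is invented for illustration. The sketch computes per-group selection rates and the ratio of the lowest rate to the highest. US employment guidance treats a ratio below 0.8 (the “four-fifths rule”) as a rough flag for adverse impact. That threshold is a screening heuristic, not proof of discrimination either way.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

def selection_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Fraction of candidates selected per group, computed from outputs alone."""
    totals: Dict[str, int] = defaultdict(int)
    selected: Dict[str, int] = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}

def adverse_impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group rate divided by the highest; below 0.8 fails the four-fifths rule of thumb."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0

# Hypothetical screener output: (group, passed_screen)
outcomes = ([("men", True)] * 60 + [("men", False)] * 40
            + [("women", True)] * 30 + [("women", False)] * 70)
rates = selection_rates(outcomes)
print(rates)                        # {'men': 0.6, 'women': 0.3}
print(adverse_impact_ratio(rates))  # 0.5
```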

4. ⚖️ Ethical & Legal Blind Spots

- What if the model made a discriminatory decision?

- Who’s accountable?

- What if user data was used for training without consent?

Black box systems blur accountability. And as these tools are embedded deeper into decision-making pipelines, the stakes get higher.

5. 📉 Vendor Lock-In & Fragile Dependencies

You build on top of someone else’s system — and they:

- Raise prices

- Shut down

- Change behavior

- Suffer a breach

Now your product is in danger, and you’re helpless to fix it.
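A common hedge against lock-in is to make your code depend on a small interface you own, rather than calling a vendor’s SDK directly. The following Python sketch assumes that every provider can be wrapped behind a single `generate(prompt)` method. `TextGenerator`, the adapter classes, and `BrokenVendor` are all hypothetical placeholders, not real SDKs.

```python
from typing import List, Protocol

class TextGenerator(Protocol):
    """The only interface the rest of the app is allowed to depend on."""
    def generate(self, prompt: str) -> str: ...

class VendorAGenerator:
    # Hypothetical adapter; a real one would call vendor A's SDK here.
    def generate(self, prompt: str) -> str:
        return f"[vendor-a] {prompt}"

class LocalFallbackGenerator:
    # Hypothetical fallback, e.g. a self-hosted model or a canned response.
    def generate(self, prompt: str) -> str:
        return f"[local] {prompt}"

class ResilientGenerator:
    """Try providers in order so one vendor's outage or shutdown is not fatal."""
    def __init__(self, *providers: TextGenerator) -> None:
        self.providers = providers

    def generate(self, prompt: str) -> str:
        errors: List[str] = []
        for provider in self.providers:
            try:
                return provider.generate(prompt)
            except Exception as exc:
                errors.append(f"{type(provider).__name__}: {exc}")
        raise RuntimeError("all providers failed: " + "; ".join(errors))

class BrokenVendor:
    # Hypothetical provider that has been shut down.
    def generate(self, prompt: str) -> str:
        raise ConnectionError("service discontinued")

gen = ResilientGenerator(BrokenVendor(), LocalFallbackGenerator())
print(gen.generate("summarize this ticket"))  # [local] summarize this ticket
```

With this structure, switching providers means writing one new adapter instead of rewriting every call site. It won’t make two vendors behave identically, but it keeps a shutdown or price hike from forcing a full rewrite.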

Every time we choose a black box for speed or simplicity, we trade away a bit of control, transparency, and safety.

The Developer’s Dilemma – Control vs. Convenience?

As AI agents and black-box solutions become more deeply embedded into our workflows, developers face a difficult question:

Do you value full control, or ultimate convenience?

For decades, developers have wielded total authority over every line of code they ship. With open-source tools and transparent architectures, we could debug, customize, and understand the complete picture.

But the new era of AI-driven tooling challenges that norm.

🧩 The Allure of Convenience

AI agents can save you hours — even days — of development time. They:

- Write boilerplate code,

- Recommend frameworks,

- Fix bugs before you spot them,

- Deploy updates automatically.

This convenience is seductive. For startups, solo devs, and tight deadlines, it’s a lifeline.

But it comes with a trade-off.

🔐 The Price of the Black Box

Many of these agents operate within closed models, opaque decision logic, or proprietary ecosystems. You may never fully understand:

- Why the AI chose a certain implementation,

- Whether it silently introduced a vulnerability,

- Or how to replicate the result without it.

This leaves developers dependent on tools they can’t audit — or worse, can’t question.

💥 The Risk of Skill Atrophy

As convenience rises, control declines. And so does developer intuition.

If you don’t need to understand the algorithm…

If you never write core logic yourself…

Will you lose the edge that makes you a true engineer?

This is not a futuristic fear; it’s already happening. Solo and bootstrapped developers leaning on AI assistants can ship MVPs without knowing how key components work.

🧭 Finding Balance

The best developers of 2025 won’t be those who abandon control for speed — nor those who reject automation to stay “pure.”

They’ll be the ones who know when to hand over the wheel, and when to grip it tightly.

The choice isn’t binary — it’s strategic.

What Developers Can Do Now

As the black-box era takes hold, developers must take proactive steps to stay grounded and responsible in their work.

Blind trust in AI-generated code isn’t an option. While AI can significantly accelerate development, it can also introduce silent errors, security holes, or unethical behavior hidden deep within opaque logic. It’s on developers to retain oversight.

Here’s how to stay in control:

- ✅ Always review AI-generated code before committing. Treat it as a junior developer’s suggestion — helpful, but not blindly trustworthy.

- 🔍 Use tools that offer logging, explainability, and traceability. If you can’t audit what your AI agent is doing, you’re running a risk.

- 🧠 Promote interpretable AI models in your workflow — especially for mission-critical or user-facing applications.

- 📚 Stay informed about the ethical and technical risks of black-box systems. Following trusted newsletters, communities, and journals can help.
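Traceability can start small. The following is a minimal Python sketch, assuming your AI agent invokes tools as ordinary Python functions. The hypothetical `audited` decorator records which tool ran, when it ran, what arguments it received, and whether it succeeded. The in-memory `AUDIT_LOG` list stands in for a durable, append-only store.

```python
import functools
import hashlib
import json
import time
from typing import Any, Callable, Dict, List

AUDIT_LOG: List[Dict[str, Any]] = []  # stand-in for an append-only file, database, or log pipeline

def audited(tool_name: str) -> Callable:
    """Decorator that records every call an AI agent makes to a tool."""
    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            entry: Dict[str, Any] = {
                "tool": tool_name,
                "ts": time.time(),
                "args": json.dumps([args, kwargs], default=str),
            }
            try:
                result = fn(*args, **kwargs)
                entry["status"] = "ok"
                # Store a hash instead of the full output to keep log entries compact.
                entry["result_sha256"] = hashlib.sha256(repr(result).encode()).hexdigest()
                return result
            except Exception as exc:
                entry["status"] = f"error: {exc}"
                raise
            finally:
                AUDIT_LOG.append(entry)  # recorded on success and on failure
        return wrapper
    return decorator

@audited("delete_file")
def delete_file(path: str) -> bool:
    # Hypothetical agent tool; this sketch only pretends to delete.
    return True

delete_file("/tmp/report.csv")
print(AUDIT_LOG[-1]["tool"], AUDIT_LOG[-1]["status"])  # delete_file ok
```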

The future isn’t about replacing developers — it’s about evolving them. Staying human in the loop will define who leads and who lags.

🧩 Conclusion: Will You Adapt or Abdicate Control?

We are rapidly moving toward a future where code is generated, optimized, and deployed by systems we don’t fully understand. This is the black box era, where development shifts from writing logic to managing logic we didn’t author.

As developers, we face a choice:

- Remain passive and let convenience erode visibility and accountability, or

- Take charge by adapting, learning, and advocating for explainability, traceability, and responsible AI development.

The tools are changing. The roles are evolving. But the responsibility remains ours.

In the end, the best developers won’t be those who code the most — but those who understand the consequences of the code they run.

❓ Frequently Asked Questions (FAQs)

1. What is a “black box” in software or AI?

A black box system is one where the internal logic or decision-making process is not visible or understandable to the user. In AI, this often refers to models like neural networks where it’s unclear how inputs lead to outputs.

2. Why is black box development a concern for developers?

Lack of transparency can lead to bugs, vulnerabilities, unethical decisions, and reduced trust — especially when AI agents make decisions you can’t trace or explain.

3. Can developers avoid using black box systems altogether?

Not entirely. Many tools and APIs already include black box elements. However, developers can choose to favor interpretable systems, demand explainability, and use logging/monitoring to retain oversight.

4. Are there tools that promote explainable AI (XAI)?

Yes, frameworks like LIME, SHAP, ELI5, and others provide insights into AI models. Some modern dev tools now include built-in XAI support.
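The sketch below does not use LIME or SHAP. Instead, it hand-rolls permutation importance, a simpler model-agnostic technique in the same spirit. scikit-learn ships a version of it as `sklearn.inspection.permutation_importance`. The idea is to shuffle one input feature at a time and measure how much the model’s accuracy drops, using nothing but the model’s predictions. The `black_box` model and its data are invented for illustration.

```python
import random
from typing import Callable, List, Sequence

def permutation_importance(predict: Callable[[Sequence[float]], int],
                           X: List[List[float]], y: List[int],
                           n_repeats: int = 10, seed: int = 0) -> List[float]:
    """Mean accuracy drop when each feature column is shuffled; needs only predict()."""
    rng = random.Random(seed)

    def accuracy(rows: List[List[float]]) -> float:
        return sum(predict(r) == t for r, t in zip(rows, y)) / len(y)

    baseline = accuracy(X)
    importances = []
    for col in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            shuffled = [row[col] for row in X]
            rng.shuffle(shuffled)
            # Rebuild the rows with only this one column shuffled.
            X_perm = [row[:col] + [v] + row[col + 1:] for row, v in zip(X, shuffled)]
            drops.append(baseline - accuracy(X_perm))
        importances.append(sum(drops) / n_repeats)
    return importances

# Hypothetical black-box model that secretly uses only feature 0.
def black_box(row: Sequence[float]) -> int:
    return int(row[0] > 0.5)

data_rng = random.Random(1)
X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
y = [black_box(row) for row in X]
print(permutation_importance(black_box, X, y))
```

Feature 1 never influences the hypothetical model, so shuffling it changes nothing and its score is exactly 0.0, while feature 0 shows a large drop. Techniques like this reveal which inputs matter, not why they matter.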

5. How do I stay prepared for this new development era?

Keep learning. Use AI, but don’t rely blindly. Join communities, read trustworthy sources, and adopt tools that prioritize ethics, transparency, and traceability.