The Silent Technical Debt of Generative AI: Why Your Codebase is Becoming a Black Box

Introduction: The Dopamine Hit of “Tab-Enter”

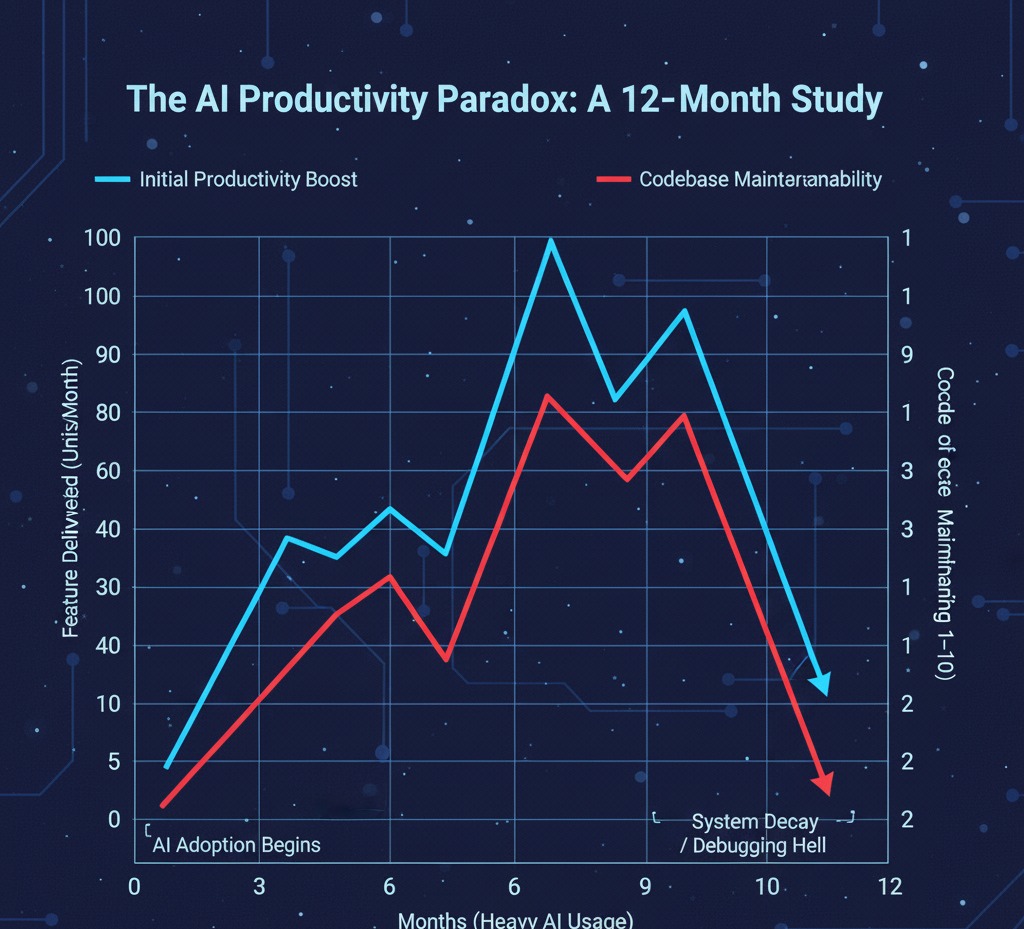

We are living in the era of the “10x Developer” myth reborn. With a simple press of the Tab key, tools like GitHub Copilot, ChatGPT, and Amazon CodeWhisperer conjure entire functions, complex regex patterns, and boilerplate classes into existence. The productivity metrics look incredible. Commits are up. Features are shipping faster. The dopamine hit of watching code materialize out of thin air is undeniable.

But at Dark Tech Insights, we don’t look at the commit graph; we look at the code rot underneath.

We are witnessing the mass production of “Black Box Code”—software that is written but not understood. We are trading long-term maintainability for short-term velocity, taking out a massive, high-interest mortgage on our codebases. The lender is an algorithm that doesn’t care about your architecture, and the currency is Technical Debt.

This post explores the ugly reality of AI-assisted programming: the erosion of deep knowledge, the rise of “spam code,” and why the next generation of legacy systems will be impossible to maintain.

1. The Illusion of Velocity: Code Volume vs. Code Value

In traditional software engineering, lines of code (LOC) is considered a vanity metric. Good engineers know that less code is often better—it means less to test, less to debug, and less to read.

Generative AI flips this logic on its head. LLMs (Large Language Models) are inherently verbose. They excel at adding, expanding, and generating. They are terrible at refactoring, deleting, or simplifying.

The “Spam Code” Phenomenon

When you ask an AI to solve a problem, it rarely suggests the most elegant architectural abstraction. Instead, it gives you the most statistically probable solution found in its training data. Often, this means:

- Verbose boilerplate instead of concise utility functions.

- Repetitive logic blocks instead of clean inheritance or composition.

- “Happy path” code that ignores complex edge cases.

The result is a codebase that grows far faster than the functionality it delivers. You aren’t building a skyscraper; you’re building a sprawling shantytown of code. It works for now, but nothing in its structure was designed to carry load.
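To make the contrast concrete, here is a small sketch of the kind of duplication described above next to a hand-refactored equivalent. The function names and the three-field user object are hypothetical, invented purely for illustration; they are not output from any specific tool.

```javascript
// Generated-style version: the same check pasted once per field.
function validateUserVerbose(user) {
  const errors = [];
  if (user.name === undefined || user.name === null || user.name.trim() === "") {
    errors.push("name is required");
  }
  if (user.email === undefined || user.email === null || user.email.trim() === "") {
    errors.push("email is required");
  }
  if (user.city === undefined || user.city === null || user.city.trim() === "") {
    errors.push("city is required");
  }
  return errors;
}

// Hand-refactored version: one small helper plus a list of field names.
const isBlank = (value) => value == null || String(value).trim() === "";

function validateUser(user, required = ["name", "email", "city"]) {
  return required
    .filter((field) => isBlank(user[field]))
    .map((field) => `${field} is required`);
}
```

Both versions report the same errors for string or missing fields, but only the second one stays the same size when a fourth required field shows up.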

2. The Cognitive Disconnect: Writing vs. Reading

There is a fundamental psychological difference between writing code and reviewing code.

When you write code manually, you are building a mental model. You understand the variable states, the loop boundaries, and the specific reason you chose a HashMap over a List. You possess Code Empathy.

When you accept an AI suggestion, you shift from Creator to Reviewer.

- The Reviewer Trap: Research on automation bias suggests that humans become cognitively lazy when reviewing content generated by an authoritative-sounding machine. We scan for syntax errors, not logical flaws.

- The Context Gap: You might accept a 20-line function because it passes the unit test. But you don’t have the deep memory of how it works. Six months later, when that function causes a race condition in production, you are debugging code that feels like it was written by a stranger.

The Dark Reality: We are filling our repositories with “Stranger Code.” We own the git blame, but we don’t own the logic.
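A small sketch of what this looks like in practice. The function names below are hypothetical, and the "suggested" version is only a plausible example of code that reads cleanly and passes the obvious test, not something attributed to a particular assistant.

```javascript
// Plausible assistant-style suggestion: tidy, and it passes the obvious test.
function medianSuggested(values) {
  const sorted = [...values].sort(); // default sort compares elements as strings
  return sorted[Math.floor(sorted.length / 2)];
}

// Version written with the edge cases in mind.
function median(values) {
  if (values.length === 0) {
    throw new RangeError("median of an empty list is undefined");
  }
  const sorted = [...values].sort((a, b) => a - b); // numeric comparison
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 1
    ? sorted[mid]
    : (sorted[mid - 1] + sorted[mid]) / 2;
}

console.log(medianSuggested([3, 1, 2]));    // 2   (the unit test passes)
console.log(medianSuggested([10, 9, 100])); // 100 (wrong: ordered as "10", "100", "9")
console.log(median([10, 9, 100]));          // 10
```

A reviewer skimming for syntax problems sees nothing wrong with the first version. Someone who wrote the sort by hand would probably have had to think about the comparator.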

3. The Security Minefield: Hallucinations and Vulnerabilities

Security is not just about intent; it’s about precision. AI models are probabilistic, not deterministic. They don’t “know” security best practices; they only know what patterns appear most frequently in their training data (which includes millions of lines of insecure, amateur code).

The “Hallucinated Package” Attack Vector

One of the darkest risks is Package Hallucination. AI models have been known to suggest importing libraries or packages that sound real but don’t exist. Attackers are now squatting on these predicted package names on npm and PyPI, injecting malware.

- Scenario: You ask Copilot for a “fast JSON parser for Node.js.”

- AI Suggestion: import { fastJsonStream } from 'fast-json-stream-v2';

- Reality: That package didn’t exist until a hacker noticed AI was suggesting it, registered it, and filled it with a crypto-miner. You just npm installed a Trojan horse because the AI told you to.
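One cheap guardrail against this scenario is to flag any imported package that is not already declared in your project's dependencies, so a new name forces a human to look it up before installing. The sketch below is a rough illustration under stated assumptions: the helper names are hypothetical, the regex only handles `import ... from` and `require(...)` forms, and Node built-ins imported without the `node:` prefix (such as `fs`) would be flagged as well.

```javascript
// Collect package names from `import ... from 'x'` and `require('x')`.
function importedPackages(source) {
  const pattern = /(?:from\s+|require\(\s*)['"]([^'"]+)['"]/g;
  const names = new Set();
  for (const match of source.matchAll(pattern)) {
    const specifier = match[1];
    // Skip relative paths, absolute paths, and prefixed built-ins.
    if (specifier.startsWith(".") || specifier.startsWith("/") || specifier.startsWith("node:")) {
      continue;
    }
    // Scoped packages keep two segments (@scope/name); others keep one.
    const parts = specifier.split("/");
    names.add(specifier.startsWith("@") ? parts.slice(0, 2).join("/") : parts[0]);
  }
  return [...names];
}

// Return imports that do not appear in the declared dependencies object
// (for example, the "dependencies" section of package.json).
function unvettedPackages(source, declaredDependencies) {
  const declared = new Set(Object.keys(declaredDependencies));
  return importedPackages(source).filter((name) => !declared.has(name));
}

const suggestion = [
  "import fastJson from 'fast-json-stream-v2';",
  "const express = require('express');",
  "import helper from './helper.js';",
].join("\n");

console.log(unvettedPackages(suggestion, { express: "^4.18.0" }));
// [ 'fast-json-stream-v2' ]
```

This does not tell you whether a package is malicious. It only makes sure that a brand-new dependency never slips in unnoticed.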

The Hardcoded Secret

Despite filters, AI models still occasionally reproduce API keys, hardcoded passwords, or insecure hashing algorithms found in public repositories. A developer moving at “AI speed” might commit these secrets before a secret scanner or code review catches them.
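As a rough illustration of what an automated check for this might look like, here is a minimal scanner sketch. The rule list and function name are hypothetical and deliberately tiny; dedicated secret-scanning tools ship far larger rule sets and should be preferred in practice. The key in the sample is the placeholder value AWS uses in its own documentation, not a real credential.

```javascript
// Illustrative rules only: a real scanner needs many more patterns.
const SECRET_PATTERNS = [
  { name: "AWS access key ID", regex: /\bAKIA[0-9A-Z]{16}\b/ },
  { name: "hardcoded password", regex: /(?:password|passwd|pwd)\s*[:=]\s*['"][^'"]{4,}['"]/i },
  { name: "weak hash (MD5)", regex: /createHash\(\s*['"]md5['"]\s*\)/i },
];

// Return one finding per (line, rule) match, with 1-based line numbers.
function findSecrets(text) {
  const findings = [];
  text.split("\n").forEach((line, index) => {
    for (const { name, regex } of SECRET_PATTERNS) {
      if (regex.test(line)) findings.push({ line: index + 1, rule: name });
    }
  });
  return findings;
}

const stagedCode = [
  'const region = "eu-west-1";',
  'const key = "AKIAIOSFODNN7EXAMPLE";',
  'const dbPassword = "hunter2-prod";',
  'const digest = crypto.createHash("md5").update(data).digest("hex");',
].join("\n");

console.log(findSecrets(stagedCode).map((f) => `${f.line}: ${f.rule}`));
// [ '2: AWS access key ID', '3: hardcoded password', '4: weak hash (MD5)' ]
```

Run as a pre-commit step, a check like this catches the careless cases. It is no substitute for actually reading what you accept.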

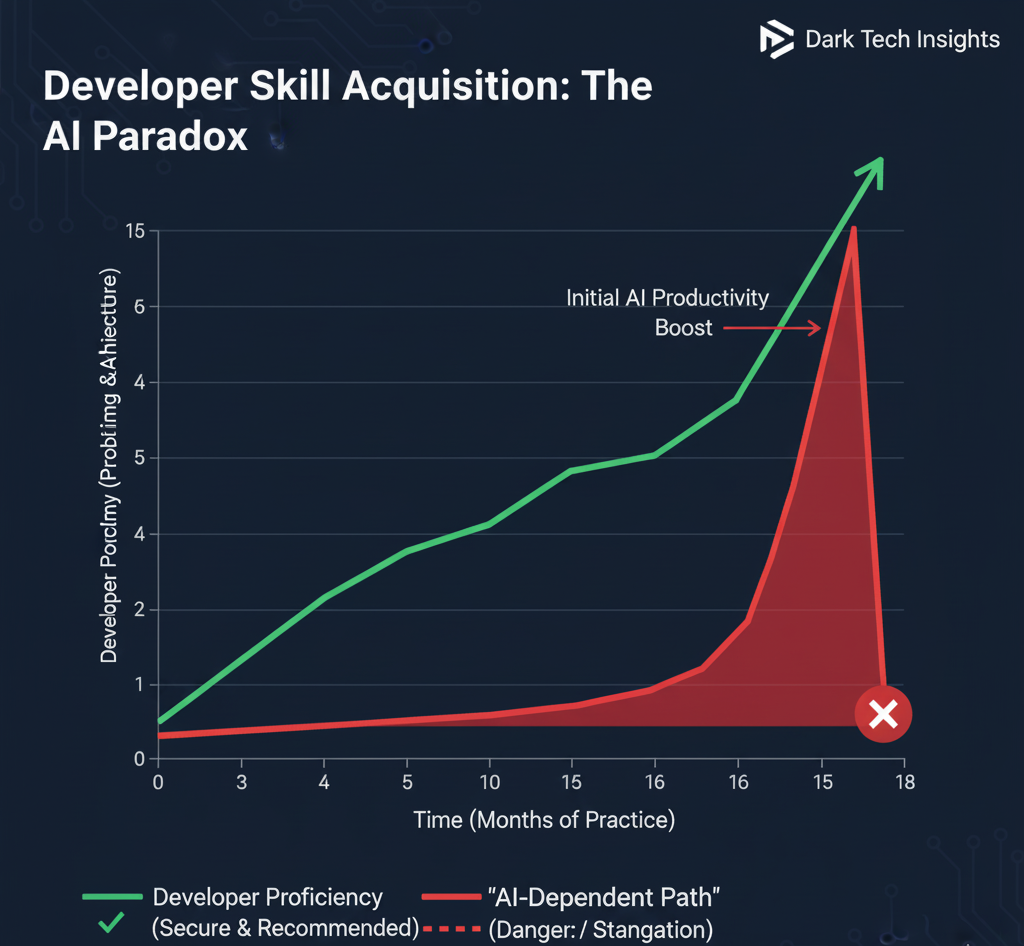

4. The Deskilling of the Developer

This is the most controversial point, and the one most seniors are afraid to discuss. Are we raising a generation of “Prompt Engineers” who cannot code without a net?

The “Stack Overflow” Effect on Steroids

In the past, copying from Stack Overflow required you to at least read the thread, understand the context, and adapt the variable names. It was a learning process. AI removes the friction of learning. It gives the answer without the why.

The Junior Trap

Junior developers need struggle. They need to bang their heads against syntax errors and logic flaws to build the neural pathways that make them Senior Engineers.

- If a Junior uses AI to bypass the struggle, they never learn system design.

- They become excellent at describing a problem to a bot, but helpless at fixing the problem when the bot fails.

The Future Risk: In 5 years, we may face a massive talent shortage of Senior Engineers who actually understand how the underlying systems work, because the current Juniors never learned the foundations.

5. The Legal and Compliance Gray Zone

If your AI assistant generates code that is a verbatim copy of a block from a GPL-licensed open-source project, and you ship that in your proprietary product, you may have pulled copyleft obligations into your entire codebase. This is the “viral license” problem.

- The Black Box of IP: You do not know the provenance of the code you are accepting.

- The Lawsuit of Tomorrow: Companies are currently flying blind. When the first major class-action lawsuit hits regarding AI-generated copyright infringement, thousands of startups will realize their entire codebase is legally radioactive.

6. Conclusion: Reclaiming the Craft

The argument here is not to ban AI. That is Luddism. The argument is to treat AI with the suspicion it deserves.

AI is not a “Pair Programmer.” A pair programmer has a conscience, a memory, and a fear of being fired. AI has none of these. It is a Stochastic Parrot—it repeats patterns without understanding meaning.

To survive the “Dark Side” of AI coding:

- Review Harder: AI code requires more scrutiny than human code, not less.

- Trust Zero: Assume every AI suggestion contains a security flaw until proven otherwise.

- Learn the Fundamentals: Use AI to automate the boring stuff, but never use it to skip learning the hard stuff.

If we don’t discipline ourselves now, the future of software isn’t a bright, automated utopia. It’s a bloated, insecure, unmaintainable ball of mud that no one knows how to fix.

❓ Frequently Asked Questions (FAQs)

1. Isn’t AI code better than “Spaghetti Code” written by bad developers?

Not necessarily. AI often generates “Spaghetti Code” much faster than a human can. While the syntax might be cleaner, the logic can be disjointed. A bad human developer writes bad code at 10 lines per hour. An AI writes bad code at 1,000 lines per hour. The scale of the mess is the problem.

2. Can’t we just use AI to fix the code it wrote?

This is a dangerous feedback loop, reminiscent of “Model Collapse” (the degradation seen when models are trained on AI-generated output). If you use AI to write code, and then AI to debug it, you are removing human judgment entirely. AI struggles to understand broad system architecture. It might fix a syntax error while introducing a logical error that destroys your data integrity.

3. Will AI replace developers entirely?

No. It will replace coders. The “Coder” translates requirements into syntax. The “Software Engineer” solves problems and manages complexity. AI kills the “Coder” role but makes the “Software Engineer” role harder and more critical. The value shifts from knowing syntax to knowing architecture.

4. How do I prevent “Package Hallucination”?

Always verify imports manually. Go to the package registry (npm, PyPI, Maven) and confirm the package exists, has a recent update history, and has a healthy number of downloads. Never blindly run npm install on an AI suggestion.
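The manual checklist above can be partly encoded. The sketch below assumes you have already looked up the registry data yourself and summarized it in a plain object; the `meta` shape, the function name, and every threshold are hypothetical choices for illustration, not values taken from any registry's API. The package-age check is an extra signal added here, on the assumption that a name registered very recently deserves a closer look.

```javascript
const DAY_MS = 1000 * 60 * 60 * 24;

// `meta` is a hypothetical, already-fetched summary of registry data:
// { exists, createdAt, lastPublishedAt, weeklyDownloads }
function vetPackage(
  meta,
  now = new Date(),
  limits = { minAgeDays: 90, maxStaleDays: 365, minWeeklyDownloads: 1000 }
) {
  if (!meta.exists) return ["package not found in the registry"];

  const warnings = [];
  const ageDays = Math.floor((now - new Date(meta.createdAt)) / DAY_MS);
  const staleDays = Math.floor((now - new Date(meta.lastPublishedAt)) / DAY_MS);

  if (ageDays < limits.minAgeDays) {
    warnings.push(`first published only ${ageDays} days ago`);
  }
  if (staleDays > limits.maxStaleDays) {
    warnings.push(`no release in ${staleDays} days`);
  }
  if (meta.weeklyDownloads < limits.minWeeklyDownloads) {
    warnings.push(`only ${meta.weeklyDownloads} weekly downloads`);
  }
  return warnings;
}

const suspicious = {
  exists: true,
  createdAt: "2024-05-20T00:00:00Z",
  lastPublishedAt: "2024-05-20T00:00:00Z",
  weeklyDownloads: 40,
};

console.log(vetPackage(suspicious, new Date("2024-06-01T00:00:00Z")));
// [ 'first published only 12 days ago', 'only 40 weekly downloads' ]
```

An empty result is not a clean bill of health. It only means the package cleared the most basic sanity checks.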

5. What is the “Flip Side” of all this?

The flip side is that developers who resist the urge to auto-generate everything will become incredibly valuable. If you are the only person who actually understands how the memory management in your app works (because you didn’t outsource it to Copilot), you become the indispensable “fixer” when the AI-generated systems break.