
Introduction — The Hidden Costs of AI Automation

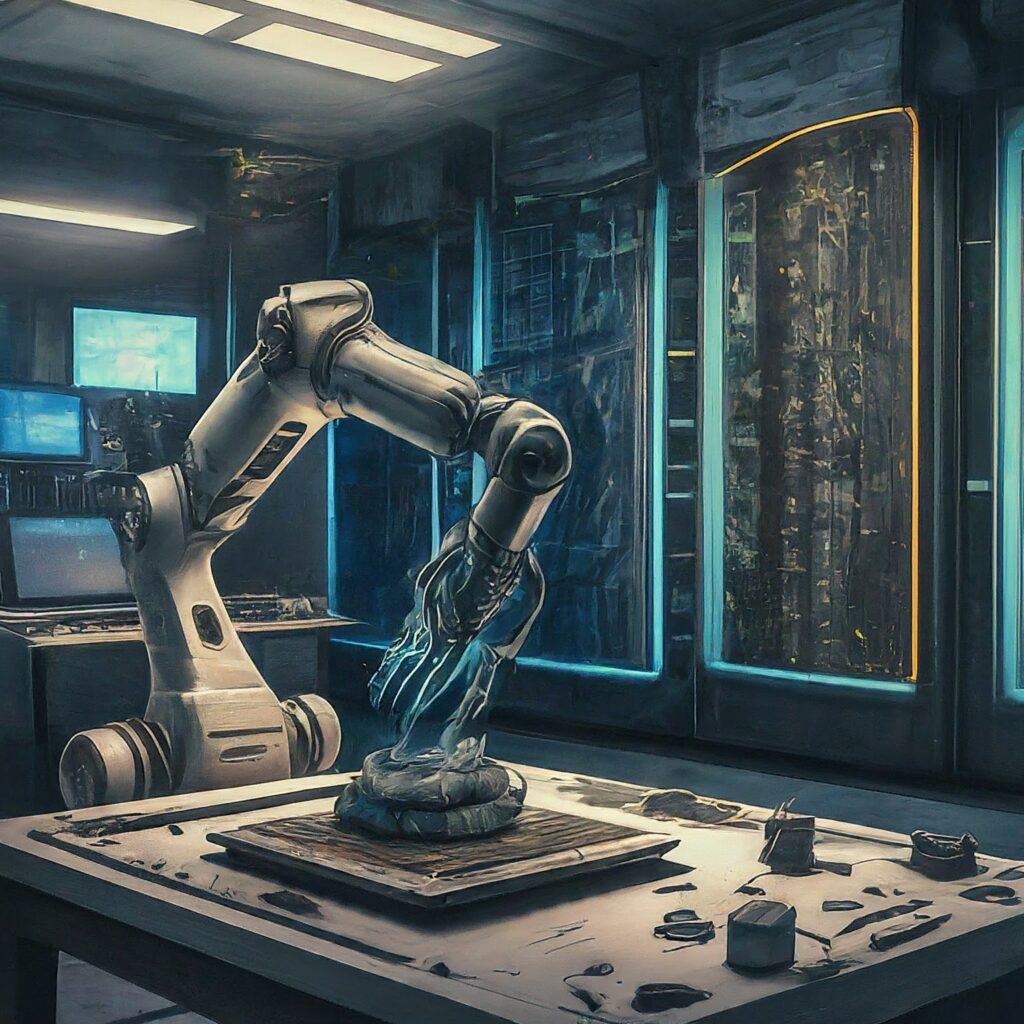

AI automation has become the golden child of modern technology. From self-driving cars to advanced chatbots, it promises efficiency, cost reduction, and productivity like never before. Companies worldwide are adopting AI to optimize workflows, automate repetitive tasks, and even replace decision-making roles. At first glance, this looks like a technological utopia — machines doing the boring work while humans focus on creativity and innovation.

But behind the excitement lies a less glamorous reality. The unintended consequences of AI automation are slowly surfacing, and they’re not just limited to job displacement. Entire industries are shifting, new forms of inequality are emerging, and society is grappling with ethical dilemmas that we haven’t yet solved. The same tools designed to empower us could very well reshape our future in ways we didn’t anticipate.

In this article, we’ll dive deep into these overlooked consequences. From hidden environmental costs to ethical blind spots, we’ll explore how the AI-driven world is changing not just our workplaces but also our social structures and personal lives. Understanding these darker aspects of AI automation is essential if we want to strike a balance between technological progress and human well-being.

Job Displacement Beyond Blue-Collar Work

When people think about automation and job loss, the first image that comes to mind is often factory workers being replaced by machines. For decades, industrial robots have been automating repetitive physical labor, and the assumption has been that white-collar or knowledge-based jobs were safe. But AI has shattered that notion. In 2025, automation isn’t just taking over assembly lines — it’s reshaping careers in journalism, law, finance, customer service, and even creative fields like design and coding.

Take, for example, AI-powered legal assistants that can scan thousands of pages of case law in seconds, or trading algorithms that can process market data far faster than human analysts. What once required human expertise is increasingly being handled by algorithms that never sleep, never tire, and rarely make errors in repetitive tasks. Even in creative industries, generative AI tools are producing artwork, music, and code that can rival human work. This shift means that white-collar professionals, traditionally considered safe from automation, now face the same risks factory workers did decades ago.

The bigger challenge lies in how society adapts to these changes. Retraining and upskilling programs are being promoted, but not everyone can pivot into an entirely new career at the pace technology evolves. The result is what economists call a “skills gap”: a mismatch between the skills displaced workers have and the skills employers now need. Without proactive policies and long-term strategies, entire segments of the middle class risk being hollowed out, creating social and economic divides that could rival or exceed those of the industrial age.

The Environmental Toll of AI Automation

AI automation is often framed as a clean, futuristic solution to modern inefficiencies, but it comes with a heavy environmental cost. Training a large model can consume enormous amounts of energy, and every query, prediction, and automated task it later serves adds more. Data centers, the backbone of AI infrastructure, already account for a significant and fast-growing share of global electricity demand. As AI adoption accelerates, the strain on power grids is intensifying, raising questions about whether this kind of technological progress is sustainable.
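To make the scale concrete, a training run’s electricity use can be roughly estimated as the number of accelerators times their power draw times run time, scaled up by the facility’s power usage effectiveness (PUE), which accounts for cooling and other overhead. This is a back-of-the-envelope sketch under stated assumptions: the function name `training_energy_kwh` and every input figure are hypothetical, and real runs also vary in utilization and draw extra power for CPUs, networking, and storage.

```python
def training_energy_kwh(num_accelerators, watts_per_accelerator, hours, pue):
    """Rough facility-level energy estimate for a training run, in kWh.

    IT energy = accelerators x watts x hours / 1000 (Wh -> kWh).
    PUE (power usage effectiveness) scales that up to include cooling and
    other facility overhead; a PUE of 1.0 would mean no overhead at all.
    """
    it_energy_kwh = num_accelerators * watts_per_accelerator * hours / 1000.0
    return it_energy_kwh * pue


if __name__ == "__main__":
    # Hypothetical run: 1,000 accelerators drawing 700 W each for 30 days,
    # in a facility with a PUE of 1.2. None of these figures describe a real model.
    energy = training_energy_kwh(1_000, 700.0, 30 * 24, 1.2)
    print(f"{energy:,.0f} kWh")  # 604,800 kWh
```

Because the estimate is a simple product, improving any single factor (more efficient chips, shorter runs, or a facility with lower cooling overhead) reduces the total proportionally.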

The environmental toll doesn’t stop with energy consumption. Cooling massive server farms requires millions of liters of water, further straining resources in regions already struggling with scarcity. Additionally, the constant push for faster, more powerful AI chips fuels electronic waste as outdated hardware is discarded. Ironically, while many AI systems are marketed as tools to help companies achieve “green efficiency,” their underlying infrastructure contributes to the very problems they claim to solve. In effect, AI automation could accelerate climate concerns if left unchecked.

This creates a paradox: the same technology designed to optimize supply chains, reduce emissions in logistics, and design sustainable products is itself a significant polluter. Governments, corporations, and researchers must now grapple with how to mitigate AI’s environmental footprint. The solutions might include transitioning data centers to renewable energy, improving chip efficiency, and enforcing stricter regulations on e-waste management. But until such measures are widely implemented, the ecological cost of AI automation remains one of its most overlooked and unintended consequences.


Ethical Blind Spots and Algorithmic Bias

AI automation doesn’t just replace jobs or consume resources; it also raises deep ethical dilemmas. One of the most pressing is algorithmic bias. Because AI systems are trained on historical data, they often inherit the prejudices and inequalities embedded in that data. Automated hiring systems may unintentionally discriminate against candidates from certain backgrounds, predictive policing tools may disproportionately target minority communities, and automated loan-approval systems may disadvantage applicants based on demographic characteristics or proxies for them. Instead of eliminating bias, AI can amplify it at scale, making discrimination harder to detect and far more widespread.

The ethical challenge doesn’t stop there. AI automation also raises questions about accountability. When a human makes a mistake, responsibility is traceable, but when an AI system makes a harmful decision, who is to blame — the programmer, the company, or the algorithm itself? This “accountability gap” creates a dangerous loophole where harmful outcomes can occur without anyone being held responsible. In fields like healthcare, law, and criminal justice, such gaps can have life-altering consequences.

What makes these blind spots even more concerning is how little attention they often receive compared to AI’s efficiency benefits. Many organizations adopt AI systems quickly to stay competitive, skipping over ethical audits or fairness checks in the process. By prioritizing speed and cost savings, businesses risk embedding systemic inequalities into their operations. Addressing these blind spots requires not just technical fixes, but also transparent regulations, ethical guidelines, and diverse teams to ensure AI automation serves everyone fairly. Without this, automation risks becoming a silent enforcer of injustice under the guise of progress.
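One of the simplest checks a fairness audit might include is comparing selection rates across groups. A common rule of thumb from U.S. employment guidelines, the “four-fifths rule,” flags any group whose selection rate falls below 80% of the most-selected group’s rate. The sketch below assumes decisions are available as (group, selected) pairs; the function names and the screening data are hypothetical, and passing this one check does not by itself show that a system is fair.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs, where selected is a bool."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_flags(decisions, threshold=0.8):
    """Flag groups whose selection rate is below `threshold` times the
    highest group's rate (the "four-fifths" rule of thumb)."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        # Nobody was selected at all, so the ratio is undefined; flag nothing.
        return {g: False for g in rates}
    return {g: (rate / best) < threshold for g, rate in rates.items()}


if __name__ == "__main__":
    # Hypothetical screening outcomes from an automated hiring tool.
    outcomes = ([("A", True)] * 60 + [("A", False)] * 40
                + [("B", True)] * 30 + [("B", False)] * 70)
    print(selection_rates(outcomes))         # {'A': 0.6, 'B': 0.3}
    print(disparate_impact_flags(outcomes))  # {'A': False, 'B': True}
```

Here group B is selected at half the rate of group A, well under the 80% line, so it is flagged for closer human review rather than automatically declared discriminatory.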

Psychological and Social Impacts of AI Automation

Beyond economics and ethics, AI automation is quietly reshaping human psychology and social behavior. For many workers, automation creates an underlying sense of insecurity — the fear that one day their role will be rendered obsolete. Even if employees keep their jobs, the knowledge that AI could replace them at any moment can lead to anxiety, burnout, and declining job satisfaction. This “automation stress” is not limited to factory workers; even professionals in healthcare, law, and education are experiencing heightened uncertainty about their long-term career stability.

On a societal level, the rise of AI automation is changing how we perceive identity and purpose. Work has long been tied to personal value and contribution to society, but when machines take over core responsibilities, people may struggle to find meaning in their roles. For some, this results in disengagement and a sense of redundancy, while for others it sparks a drive toward creative or entrepreneurial pursuits. Either way, AI automation is pushing society into uncharted psychological territory, where humans must redefine their sense of worth beyond traditional career paths.

Social dynamics are also shifting. As some groups adapt quickly and thrive in an AI-driven economy, others are left behind, deepening class divides and fueling resentment. This polarization can foster distrust toward technology and even toward those who benefit from it. If left unaddressed, such divides could erode social cohesion and create cultural backlash movements against automation. Understanding and mitigating these psychological and social impacts is just as crucial as solving the technical or ethical challenges — because without addressing the human side, automation risks alienating the very people it’s supposed to empower.

Economic Inequality and the Power Shift

AI automation isn’t just changing jobs — it’s redistributing economic power in ways that favor a small group of corporations and individuals. The companies that own the largest AI models and the most advanced automation systems are consolidating their control over global markets. Tech giants with the resources to build massive data centers and hire elite AI talent are pulling further ahead, while smaller firms struggle to keep pace. This concentration of power mirrors the industrial revolution, but on a digital scale where wealth and influence are even more unevenly distributed.

At the same time, automation is squeezing the middle class. Highly skilled workers in AI, robotics, and data science are thriving, but millions of traditional roles are being automated away with no clear replacement. The result is a widening gap between those who benefit from automation and those left behind. Instead of creating broad prosperity, unchecked AI adoption could deepen economic inequality and fuel resentment toward both corporations and governments that fail to address the fallout.

This power shift also has geopolitical consequences. Countries that dominate AI development — such as the U.S. and China — are gaining unprecedented leverage over global economies. Nations without advanced AI infrastructure risk becoming dependent on foreign technologies, further widening global inequality. Unless policies are crafted to ensure fair distribution of automation’s benefits, we may see the rise of a digital elite controlling not just industries, but the very structure of future societies. AI has the potential to democratize opportunity, but without intervention, it may just reinforce existing hierarchies.

Dependence, Security Risks, and the Fragile Future

As AI automation becomes deeply embedded in industries, governments, and daily life, our dependence on these systems grows stronger. From self-driving logistics fleets to AI-powered financial trading platforms, society is increasingly reliant on algorithms to keep essential operations running smoothly. While this dependency brings efficiency, it also creates fragility. If critical systems fail, get hacked, or behave unpredictably, the fallout could be catastrophic — disrupting supply chains, collapsing markets, or even endangering lives in healthcare and transportation.

Cybersecurity experts warn that automation introduces new attack surfaces. AI-driven systems are not immune to manipulation; in fact, they can be exploited in ways traditional systems cannot. Adversarial attacks — where malicious actors subtly alter inputs to trick AI — highlight just how vulnerable automation can be. Imagine a manipulated traffic dataset confusing an autonomous fleet of trucks, or an AI-powered stock trading system being nudged into creating market chaos. The more automated our world becomes, the greater the risks of cascading failures triggered by even small vulnerabilities.
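For simple models, the mechanics of an adversarial attack fit in a few lines. The sketch below uses a toy linear classifier with made-up weights and nudges each input feature by at most `epsilon` in whichever direction lowers the model’s score, the idea behind the fast gradient sign method (FGSM). Real attacks target far more complex models, and the names `score` and `perturb_against` are illustrative only, not part of any library.

```python
def score(weights, bias, x):
    """Linear model: a positive score means the model predicts the positive class."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def sign(v):
    return (v > 0) - (v < 0)


def perturb_against(weights, x, epsilon):
    """Move each feature by at most epsilon in the direction that lowers the score.

    For a linear model the gradient of the score with respect to x is simply
    the weight vector, so stepping against sign(weight) is the FGSM idea.
    """
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


if __name__ == "__main__":
    # Hypothetical model and input; a positive score might mean "stop sign detected".
    weights = [0.5, -0.3, 0.8, 0.4]
    bias = -0.2
    x = [0.3, 0.2, 0.1, 0.2]
    x_adv = perturb_against(weights, x, epsilon=0.1)
    print(round(score(weights, bias, x), 3))      # 0.05
    print(round(score(weights, bias, x_adv), 3))  # -0.15
```

No feature moves by more than 0.1, yet the score flips from positive to negative: the model now “misses” the stop sign even though the input looks almost unchanged.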

This growing fragility highlights a paradox: the more efficient and advanced AI makes our systems, the more brittle they may become under stress. Dependence on automation reduces human oversight and resilience, leaving societies exposed to large-scale disruptions. Building safeguards like “human-in-the-loop” oversight, diversified backup systems, and transparent AI monitoring will be essential to prevent disasters. Without these precautions, the promise of automation could quickly turn into its biggest liability — a fragile future where the collapse of one AI system can ripple across entire economies and nations.
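A basic form of human-in-the-loop oversight is to let a system act on its own only when it is confident, send everything else to a person, and pause automation when too much is being escalated. The sketch below assumes each decision carries a confidence score between 0 and 1; the `Decision` type, the `route` function, and both thresholds are hypothetical choices, and in practice model confidence scores often need calibration before they can be trusted this way.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    item_id: str
    label: str
    confidence: float  # model's own confidence score, assumed to lie in [0, 1]


def route(decisions, auto_threshold=0.9, max_review_share=0.5):
    """Apply confident decisions automatically, queue the rest for a human,
    and signal a pause if too large a share of a batch (a list) needs review."""
    automatic, needs_review = [], []
    for d in decisions:
        if d.confidence >= auto_threshold:
            automatic.append(d)
        else:
            needs_review.append(d)
    review_share = len(needs_review) / len(decisions) if decisions else 0.0
    pause_automation = review_share > max_review_share
    return automatic, needs_review, pause_automation


if __name__ == "__main__":
    # Hypothetical loan decisions produced by a model.
    batch = [
        Decision("app-1", "approve", 0.97),
        Decision("app-2", "reject", 0.62),
        Decision("app-3", "approve", 0.91),
    ]
    auto, review, pause = route(batch)
    print([d.item_id for d in auto])    # ['app-1', 'app-3']
    print([d.item_id for d in review])  # ['app-2']
    print(pause)                        # False
```

The pause flag acts as a crude monitor: a sudden rise in low-confidence decisions can signal drifting data or deliberate manipulation, which is exactly when a human should take over.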

Conclusion — Balancing Progress With Responsibility

AI automation is not inherently good or bad — it’s a tool, and like all powerful tools, its impact depends on how we use it. The unintended consequences we’ve explored — from job displacement and environmental costs to ethical blind spots, psychological strain, inequality, and security risks — highlight the complexity of automation’s influence on society. Ignoring these challenges while racing toward efficiency and productivity could lead to long-term instability, both socially and economically.

The future of AI automation requires balance. Innovation should continue, but it must be coupled with responsibility. Companies must adopt ethical AI practices, governments need to enforce clear regulations, and society as a whole must redefine what work, value, and purpose mean in an age where machines can replicate human effort. Education, reskilling, and inclusivity will play a vital role in ensuring that the benefits of automation are shared, not hoarded.

Ultimately, AI automation has the potential to be humanity’s most powerful ally — but only if we acknowledge its risks and actively work to mitigate them. Instead of blindly celebrating efficiency, we must ask harder questions: Who benefits? Who is left behind? And how can we ensure that progress uplifts rather than divides? The answers to these questions will determine whether AI automation shapes a future of shared prosperity or one of growing fragility.

Frequently Asked Questions (FAQs)

1. What are the main unintended consequences of AI automation?

The most common unintended consequences include job displacement beyond traditional industries, environmental strain from data centers, ethical biases in algorithms, psychological stress for workers, and growing economic inequality.

2. Does AI automation only affect low-skill jobs?

No. AI automation is increasingly impacting white-collar jobs in law, finance, journalism, customer service, and even creative industries like art and coding. High-skill workers are also at risk of displacement.

3. How does AI automation harm the environment?

Training and running AI models takes enormous amounts of energy, and the data centers that host them use large volumes of water for cooling. This contributes to carbon emissions, electronic waste, and resource depletion, making AI less sustainable than many realize.

4. Can society adapt to the risks of AI automation?

Yes — with the right policies, education, and oversight. Reskilling workers, regulating AI for fairness, investing in renewable-powered data centers, and maintaining human oversight can help mitigate risks.

5. Will AI automation replace humans completely?

Unlikely. While AI will handle many tasks, humans bring creativity, empathy, and ethical judgment that machines cannot fully replicate. The future is more likely to be one of collaboration, with humans and AI working together.